You are viewing the RapidMiner Hub documentation for version 2024.0.

High-availability of Altair AI Hub Server

Altair AI Hub can be run in high-availability mode, where high availability describes how well a system provides useful resources over a set period of time. High availability aims to guarantee a defined degree of functional continuity within a time window, expressed as the relationship between uptime and downtime. Uptime and availability are not the same thing: a system may be up for a complete measuring period but still be unavailable due to network outages or downtime in related support systems. Downtime and unavailability, on the other hand, are synonymous.
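To make this relationship concrete, the following Python sketch computes availability over a measuring period. It assumes the common convention availability = (period minus downtime) / period, where downtime counts any unavailability as described above; the function names are illustrative only.

```python
def availability(period_hours: float, downtime_hours: float) -> float:
    """Fraction of the measuring period during which the system was available.

    downtime_hours counts every period of unavailability (e.g. network outages or
    failures of support systems), not only times when the process itself was down.
    """
    if period_hours <= 0:
        raise ValueError("measuring period must be positive")
    if not 0 <= downtime_hours <= period_hours:
        raise ValueError("downtime must lie within the measuring period")
    return (period_hours - downtime_hours) / period_hours


def allowed_downtime(target: float, period_hours: float) -> float:
    """Maximum downtime (in hours) that still meets a target availability."""
    if not 0 <= target <= 1:
        raise ValueError("target must be between 0 and 1")
    return (1 - target) * period_hours


# A 30-day month has 720 hours; 0.72 hours (about 43 minutes) of downtime
# corresponds to 99.9 % availability.
print(f"{availability(720, 0.72):.4f}")       # 0.9990
print(f"{allowed_downtime(0.999, 720):.2f}")  # 0.72
```

Working backwards from a target with `allowed_downtime` is often the more useful direction when deciding which components need to be run in high-availability mode.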

High-availability setups differ from case to case. The setup depends heavily on your requirements: what you want to achieve and guarantee for the individual applications involved, and where you can accept unavailability. Which applications to run in high-availability mode is therefore up to you. This page outlines the requirements of the AI Hub components and gives conceptual guidance on which applications should probably be run in high-availability mode.

As a first step, please read through the following sections carefully, then extract requirements and goals for your setup. The outlined requirements and expected behavior should give you a guideline on how to get started with your setup.

Please keep in mind that high-availability mode is neither a backup solution nor a way to achieve scalability.

Deployment

It's recommended to set up high-availability mode with the Kubernetes deployment approach. AI Hub's Kubernetes service definitions are designed to be served and scaled up easily. In addition, the use of persistent volumes makes it easier to provide the distributed file system required by the AI Hub Server backend across different nodes.

Setting up high-availability mode with the Docker Compose deployment approach is possible, but requires more manual work, such as defining an overarching load balancer that routes traffic to the individual instances. HAProxy is a common choice.

Configuration for applications

The following sections give an overview of what is required to enable high-availability mode for each individual AI Hub component, focusing on the Server core components such as the backend, frontend and Web API Gateway. 3rd party applications like JupyterLab, Grafana, Keycloak

AI Hub Server backend and frontend

AI Hub Server backend and frontend can be put into high-availability mode independently of whether the required 3rd party applications are in high-availability mode themselves.

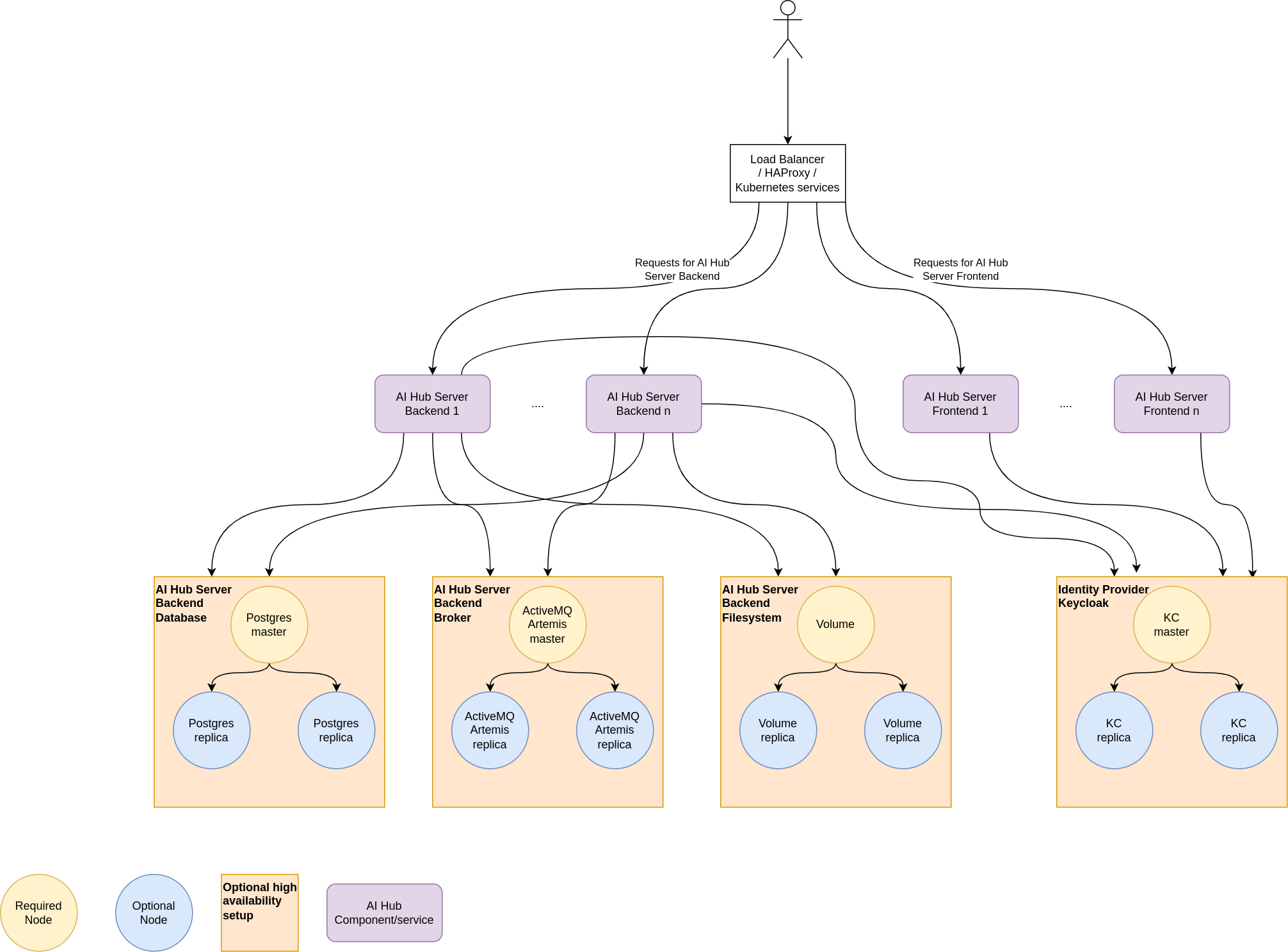

The image illustrates a potential setup with one to n AI Hub Server backends and AI Hub Server frontends, served by Kubernetes services or by a manually configured proxy in front of them.

Please note that the required 3rd party applications do not need to be in high-availability mode themselves, but running them that way increases the likelihood that the AI Hub Server backend keeps operating, for example even if an ActiveMQ Artemis node becomes unavailable.

The following table lists high-level requirements for the AI Hub Server backend and should serve as a starting point for what is required to put it into high-availability mode.

| 3rd Party Application | References for high-availability mode | Access requirement |
|---|---|---|
| Database (Postgres) | Postgres documentation | Yes. All backend instances need to be able to connect. |
| Identity Provider (Keycloak) | Keycloak documentation | Yes. All backend and frontend instances need to be able to connect. |
| Message Broker (ActiveMQ Artemis) | See the ActiveMQ Artemis section below and the ActiveMQ Artemis documentation | Yes. All backend instances need to be able to connect. |
| Shared file system: AI Hub Server Home Directory | Depends on the solution you've selected (e.g. NFS, Ceph) | Yes. All backend instances need read and write access. |
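Before starting additional backend instances, it can help to verify the access requirements from the table on each node. The following Python sketch is a minimal pre-flight check under stated assumptions: it only tests plain TCP reachability of a service and whether a file can be written and read back in the shared home directory; it does not validate credentials or protocols, and the helper names are hypothetical.

```python
import socket
import tempfile


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port can be opened from this machine."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and DNS failures
        return False


def can_read_write(directory: str) -> bool:
    """True if a temporary file can be created, written and read back in directory.

    The file is deleted automatically when the with-block exits.
    """
    try:
        with tempfile.NamedTemporaryFile(dir=directory) as f:
            f.write(b"ha-check")
            f.flush()
            f.seek(0)
            return f.read() == b"ha-check"
    except OSError:  # e.g. missing directory or no write permission
        return False
```

Run it on every backend node with your own host names, ports and home directory path, for example `can_connect(<database host>, 5432)` for a Postgres listener on its default port and `can_read_write(<AI Hub Server home directory>)` for the shared file system.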

In order to put the AI Hub Server backend into high-availability mode, the component itself needs some configuration changes:

- Enable the application profile `clustered` by setting `SPRING_PROFILES_ACTIVE=clustered` for all AI Hub Server backend instances, and point all of them to the very same database service.
- List all AI Hub Server backend instances (their reachable domains/IP addresses) for proper service discovery of Endpoints in the AI Hub Server backend:
  - Set the peer hostname with `EUREKA_INSTANCE_HOSTNAME=peer1`.
  - Adapt the service URLs to be a list with `EUREKA_CLIENT_SERVICE_URL_DEFAULT_ZONE=https://registryUser:registryPassword@peer1/eureka/,https://registryUser:registryPassword@peer2/eureka/`.
  - Change these values accordingly for all instances (referred to as `peer1` and `peer2` here).
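The per-instance settings above follow a simple pattern: every backend instance gets the same profile and the same list of peer URLs, and only `EUREKA_INSTANCE_HOSTNAME` differs. The following Python sketch generates these values. `peer1`, `peer2`, `registryUser` and `registryPassword` are the placeholders used above, the helper names are hypothetical, and the shared database connection is not covered because its settings are not described on this page.

```python
from typing import Dict, List


def eureka_default_zone(peers: List[str], user: str, password: str) -> str:
    """Comma-separated list of the Eureka service URLs of all backend peers.

    Credentials are inserted verbatim into each URL.
    """
    return ",".join(f"https://{user}:{password}@{peer}/eureka/" for peer in peers)


def backend_env(peer: str, peers: List[str], user: str, password: str) -> Dict[str, str]:
    """Environment variables for one clustered AI Hub Server backend instance."""
    if peer not in peers:
        raise ValueError(f"{peer!r} must be one of the listed peers")
    return {
        # Enables the clustered application profile on every backend instance.
        "SPRING_PROFILES_ACTIVE": "clustered",
        # The hostname under which this instance is reachable by the others.
        "EUREKA_INSTANCE_HOSTNAME": peer,
        # Every instance gets the full list of peers, including itself.
        "EUREKA_CLIENT_SERVICE_URL_DEFAULT_ZONE": eureka_default_zone(peers, user, password),
    }


if __name__ == "__main__":
    peers = ["peer1", "peer2"]
    for peer in peers:
        for key, value in backend_env(peer, peers, "registryUser", "registryPassword").items():
            print(f"{peer}: {key}={value}")
```

Generating the list from a single source avoids instances drifting apart when a peer is added. The same `EUREKA_CLIENT_SERVICE_URL_DEFAULT_ZONE` value is also what the Web API Gateway and the Web API Agents need, as described in their sections.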

No configuration changes to the AI Hub Server frontend are required.

AI Hub Web API Gateway

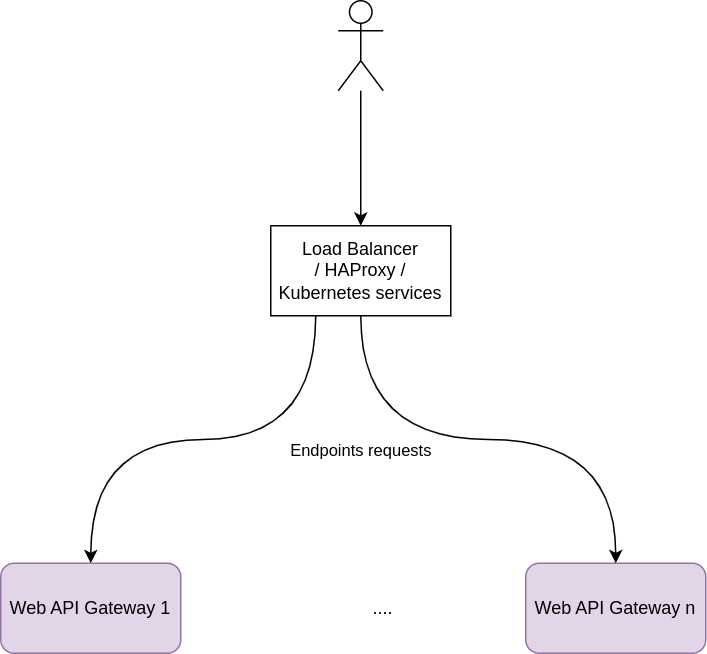

The Web API Gateway is stateless in the sense that it retrieves the available Web API Agents from all AI Hub Server backend instances through auto discovery. Putting this component into high-availability mode is optional, but recommended.

For the Web API Gateway to query all instances known to the auto discovery for Web API Agents, adapt its service URLs to be a list with `EUREKA_CLIENT_SERVICE_URL_DEFAULT_ZONE=https://registryUser:registryPassword@peer1/eureka/,https://registryUser:registryPassword@peer2/eureka/`.

AI Hub Web API Agents

Web API Agents serve Endpoints, and the Web API Gateway routes requests to them. In addition, they register themselves with the AI Hub Server backend, so they need to know all backend instances used for auto discovery.

In a high-availability setup, please adapt their service URL configuration to be a list with `EUREKA_CLIENT_SERVICE_URL_DEFAULT_ZONE=https://registryUser:registryPassword@peer1/eureka/,https://registryUser:registryPassword@peer2/eureka/`.

AI Hub Job Agents

Job Agents are connected to a Queue and execute processes. The Queue is backed by an ActiveMQ Artemis queue, so enabling high-availability mode for the message broker is highly recommended for operational continuity.

If you want to ensure that processes remain executable, add more Job Agents to the specific Queue.

ActiveMQ Artemis

The ActiveMQ Artemis distribution allows you to enable high-availability mode by placing drop-in configuration snippets into `/var/lib/artemis/etc-override`, naming them `broker-01.xml` and so on. By opening a shell in the distribution container, you can see all overrides already present in that directory. Please make sure that your file names differ from those of the default overrides, and make your changes persistent by mounting them as a volume file or similar. The snippets are processed in order, so `00` is merged before `01`. The merging is done with XSLT transformations.

Please refer to the official ActiveMQ Artemis high-availability documentation for the required configuration settings.
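The two rules for override snippets (do not reuse a default file name, and expect them to be merged in order) can be checked before mounting your files. The following Python sketch assumes that the merge order is the lexicographic order of the file names, which matches the `00` before `01` example; the helper names and the example file names are hypothetical.

```python
from pathlib import Path
from typing import Iterable, List


def list_overrides(directory: str = "/var/lib/artemis/etc-override") -> List[str]:
    """Names of the XML override snippets currently present in the directory."""
    return sorted(p.name for p in Path(directory).glob("*.xml"))


def plan_overrides(defaults: Iterable[str], custom: Iterable[str]) -> List[str]:
    """Reject custom snippets that reuse a default file name and return all
    snippets in the assumed merge order (lexicographic, so 00 before 01)."""
    defaults_set, custom_set = set(defaults), set(custom)
    clashes = sorted(defaults_set & custom_set)
    if clashes:
        raise ValueError(f"custom overrides reuse default file names: {clashes}")
    return sorted(defaults_set | custom_set)


if __name__ == "__main__":
    # Hypothetical file names; inside the container, list_overrides() shows the real defaults.
    print(plan_overrides(["broker-00.xml"], ["broker-02.xml", "broker-01.xml"]))
```

Because the assumed order is lexicographic, zero-padded numbers keep it predictable: `broker-10.xml` would otherwise sort before `broker-2.xml`.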

Scoring Agents

High-availability mode for Scoring Agents is not recommended. Please switch to Web API Agents, their managed counterpart, which support high-availability mode out of the box.

Scoring Agents are not designed to be put into high-availability mode, although it can be achieved with manual tweaking.

If you still want to put them into a manual high-availability setup, please pay attention to the following constraints:

- Ensure the `deployments` are synchronized, while each Scoring Agent instance still uses its own home directory.
- Add a load balancer in front of all your Scoring Agents.