You are viewing the RapidMiner Hub documentation for version 2024.0.

Scalable architecture

The original version of this document was written before Altair AI Hub (then called RapidMiner Server) was reconfigured as a set of Docker images. Here we have only slightly modified the text, ignoring many of the peripheral components that were introduced later, in order to better concentrate on the heart of the system.

The design

The design of the AI Hub Server environment reflects a typical data science workflow, where there are two kinds of activities:

Model building, involving long-running processes that can be placed on a queue and run asynchronously

AI Hub Server offers a queue system for long-running jobs, which are executed externally via Job Agents. You increase processing power by adding Job Agents.

Prediction, or any other application of the models, where the need for a real-time response is paramount

There are two engines for generating predictions:

- Web API Agents: managed, load-balanced entities that can run externally or internally

- Scoring Agents: external entities that run independently of AI Hub

You increase processing power by adding Scoring Agents or Web API Agents.

Typically, you will use Altair AI Studio to design the processes you will run in the Altair AI Hub environment.

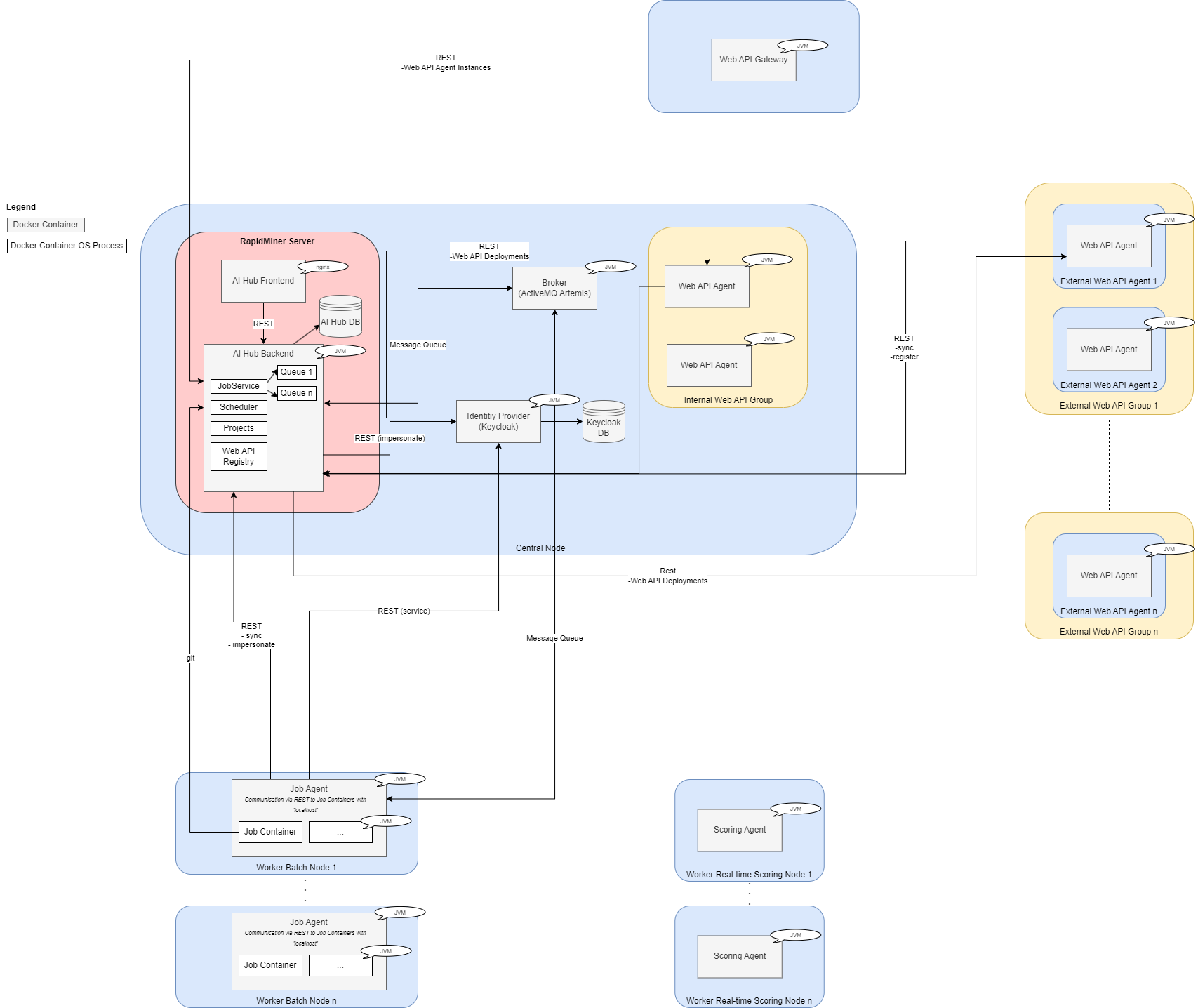

In the diagram above, each blue box represents a separate machine.

- AI Hub Server is installed on the big blue box on top.

- Remote Job Agents are hosted on the blue boxes at the bottom.

- Scoring Agents function independently, on the side.

- The Web API Agents can reside on the AI Hub Server node or run standalone.

- The Web API Gateway can reside on the AI Hub Server node or run standalone.

AI Hub Server

AI Hub Server is the central component in the architecture. You interact with it via a web interface or via Altair AI Studio. Its main responsibilities are:

- User, queue, and permissions management

- Scheduling of user jobs (processes)

- Execution of processes running on the local Job Agent, if it exists

- Project management (storage of models, processes, etc. and permissions for them)

- Connection management (DB, Hadoop/Radoop, etc.)

Read more: Install Altair AI Hub

Job Agent

The design with Job Agents running remotely on dedicated machines is aimed at scalability.

Each Job Agent is configured to point to one of the queues on AI Hub Server. Its only responsibility is to pick up jobs from that queue and run them. When started, the Job Agent spawns a configurable number of Job Containers as separate system processes. Jobs are then handed to those Job Containers, and Altair RapidMiner processes are executed within them. When the Job Agent is shut down, all of its Job Containers are shut down as well. For each Job Agent, both the number of Job Containers that can be spawned and the memory available to them are configurable.

Multiple Job Agents can point to the same queue. You can manage the queues, and therefore the allocation of resources, by assigning permissions.
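The relationship between a queue, its Job Agents, and their Job Containers can be illustrated with a small conceptual model. This is only a sketch and not AI Hub code: threads stand in for the separate system processes that real Job Containers run as, and the names `run_job_agent`, `demo`, `agent-a`, and `agent-b` are hypothetical.

```python
import queue
import threading


def run_job_agent(job_queue, results, agent_name, num_containers):
    """Model one Job Agent: start `num_containers` workers bound to one queue."""

    def container(container_id):
        # Each worker stands in for a Job Container that stays alive between jobs.
        while True:
            job = job_queue.get()
            if job is None:  # sentinel: the agent is shutting down
                job_queue.task_done()
                break
            results.append((agent_name, container_id, job()))
            job_queue.task_done()

    workers = [
        threading.Thread(target=container, args=(i,))
        for i in range(num_containers)
    ]
    for worker in workers:
        worker.start()
    return workers


def demo():
    """Two agents (2 + 1 containers) share one queue and process six jobs."""
    job_queue = queue.Queue()
    results = []  # list.append is atomic in CPython, so no lock is needed here
    workers = []
    for name, containers in {"agent-a": 2, "agent-b": 1}.items():
        workers += run_job_agent(job_queue, results, name, containers)

    for n in range(6):
        job_queue.put(lambda n=n: n * n)  # a "job" is just a callable here

    for _ in workers:  # one shutdown sentinel per container
        job_queue.put(None)
    for worker in workers:
        worker.join()
    return results
```

Calling `demo()` returns one `(agent, container, result)` tuple per job. Which container handles which job varies from run to run, which mirrors the point that adding Job Agents to a queue adds capacity without changing how jobs are submitted.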

Read more: configure Job Agents, configure Job Containers

Job Container

The Job Container spawned by the Job Agent runs an Altair AI Studio instance that is capable of executing an Altair RapidMiner process. It's bound to a system port in order to accept jobs from the Job Agent via REST API.

By default, the Job Container does not terminate after each process, so subsequent processes start almost instantly.

It might be desirable to run each job in its own sandbox so that the system is more robust and a job cannot be affected by jobs that ran before it. This behavior can be configured, e.g. by restarting the Job Container after a process has finished. Please refer to the Job Agent's configuration page for further details.

The price of this safety is latency: restarting the Job Container takes a matter of seconds, and every job then incurs that additional delay.

If a real-time response is paramount, we recommend using a Web API Agent or a Scoring Agent. For example, you might build a model in a Job Container and generate predictions for that model via a Scoring Agent.
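To get a feel for when the restart penalty matters, the arithmetic can be sketched as follows. The 5-second restart time is purely an illustrative assumption (the text only states that it is measured in seconds), and the function names are hypothetical.

```python
def total_runtime_seconds(num_jobs, job_seconds, restart_seconds=0.0):
    """Sequential runtime on one Job Container, restarting after every job."""
    return num_jobs * (job_seconds + restart_seconds)


def restart_overhead_fraction(job_seconds, restart_seconds):
    """Share of each job's wall-clock time that is spent on the restart."""
    return restart_seconds / (job_seconds + restart_seconds)


# Assumed restart time of 5 s, chosen only for illustration.
long_job = restart_overhead_fraction(job_seconds=600.0, restart_seconds=5.0)
short_job = restart_overhead_fraction(job_seconds=0.2, restart_seconds=5.0)

print(round(long_job, 3))   # 0.008
print(round(short_job, 3))  # 0.962
```

Under these assumed numbers, a ten-minute model-building job barely notices the restart, while a sub-second scoring call would spend almost all of its time waiting for it.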

Web API Agent / Scoring Agent

As mentioned previously, there are two engines for generating predictions:

- Web API Agents - communicate with Altair AI Hub

- Scoring Agents - run independently of Altair AI Hub

When generating predictions via the Scoring Agent, you need Altair AI Hub to create an endpoint, but after that you may choose to run your processes independently of Altair AI Hub.

The Web API architecture is designed to offer Web API Agents the same level of management and services that Altair AI Hub provides for Job Agents, with the Web API Group taking the role of the queue.

As the table below makes clear, the Web API Agent / Scoring Agent is the scalable, low-latency counterpart to the Job Agent / Job Container.

| Component | Scalability | Low-latency response | Instant execution |
|---|---|---|---|
| Job Agent / Job Container | | | |
| Scoring Agent | | | |
| Web API Agent | | | |


Default ports and communication paths

The following table lists the default ports used internally by the AI Hub platform. In an actual deployment, such as the docker-compose setup, some of these ports are routed through a reverse proxy like nginx, which serves as the sole entry point for Altair AI Studio or any third-party application communicating with AI Hub. In such scenarios, those ports are not exposed by default or are reachable only within an internal network.

| Application | Default service port(s) | Default optional/debug/metric port(s) | REST communication | Broker communication |
|---|---|---|---|---|
| ActiveMQ Artemis (Broker) | 61616 | 8161 | - | AI Hub Backend, Job Agent |
| AI Hub Backend | 8080 | 8077 | - | Job Agent |
| AI Hub Frontend | 80 | - | AI Hub Backend | - |
| Job Agent | - | 8066 | AI Hub Backend, Job Container (via localhost) | AI Hub Backend |
| Job Container | 10000... (localhost listen on Job Agent) | - | AI Hub Backend, Job Agent | - |
| Scoring Agent | 8090 | 8067 | - | - |
| Web API Agent | 8090 | 8067 | AI Hub Backend, Web API Gateway | - |
| Web API Gateway | 8099 | 8078 | AI Hub Backend, Web API Agent | - |
| License Proxy | 9898 | 9191 | Altair License Manager, AI Hub Backend, Job Agent, Web API Agent, Scoring Agent (depends on configuration) | - |
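When debugging connectivity inside the internal network, a quick TCP reachability check against the default service ports can help. The following is a minimal sketch using only the Python standard library. The port numbers are copied from the table above, while the helper names `is_port_open` and `check_ports` are hypothetical. A successful TCP connection only shows that something is listening on the port, not that the expected service is healthy.

```python
import socket

# Default service ports as listed in the table above. The Job Agent has no
# service port, and Job Containers listen on localhost ports on the Job Agent
# host, so neither is included here.
DEFAULT_SERVICE_PORTS = {
    "ActiveMQ Artemis (Broker)": 61616,
    "AI Hub Backend": 8080,
    "AI Hub Frontend": 80,
    "Scoring Agent": 8090,
    "Web API Agent": 8090,
    "Web API Gateway": 8099,
    "License Proxy": 9898,
}


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and DNS failures
        return False


def check_ports(host, ports=None, timeout=1.0):
    """Map each component name to whether its default port accepts connections."""
    ports = DEFAULT_SERVICE_PORTS if ports is None else ports
    return {name: is_port_open(host, port, timeout) for name, port in ports.items()}
```

For example, `check_ports("localhost")` returns a dictionary of component names and booleans. Behind a reverse proxy, most of these ports are expected to be unreachable from outside the internal network, so a `False` result from an external machine is not necessarily a fault.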