RapidMiner Radoop documentation, version 2024.0

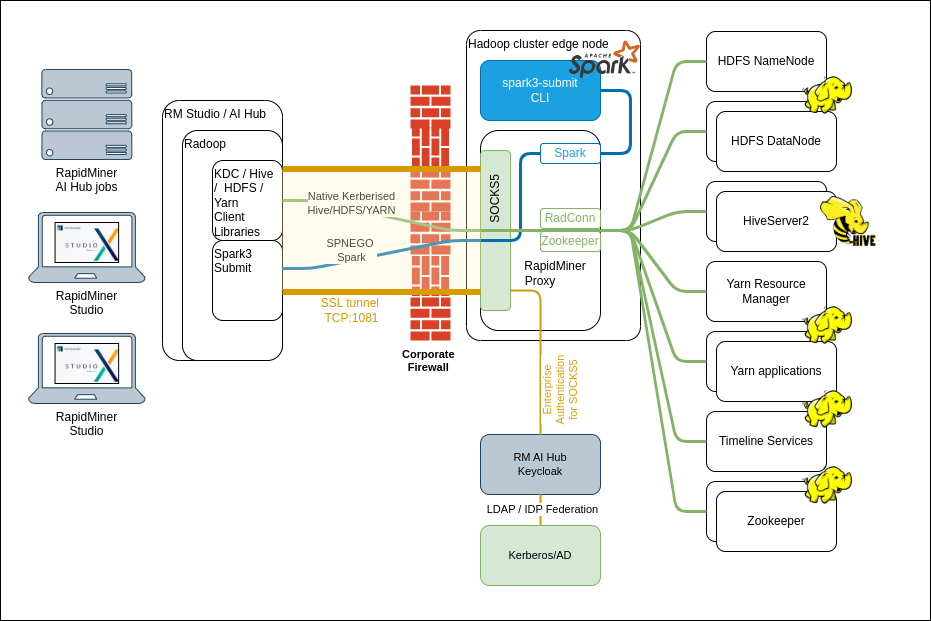

Hadoop Cluster Networking Overview

The data stored in a Hadoop cluster is often confidential, so it is important to ensure that your data is safe from unauthorized access. Many companies decide to deploy the Hadoop cluster to a separate network, behind firewalls. The sections below provide a few suggested ways to make sure that Radoop can connect to these clusters.

Note: You must have a fully functioning Hadoop cluster before implementing Radoop. Hadoop cluster administrators can use the following tips and tricks, which are provided only as helpful suggestions and are not intended as supported features.

Networking with Radoop Proxy

Radoop Proxy makes the networking setup significantly simpler: only one port needs to be opened on the firewall for the Radoop client to access a Hadoop cluster. See the table below for details.

| Default Port # | Notes |
|---|---|
| 1081 | This port is used by the Radoop Proxy and is configured during Radoop Proxy installation. |
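
One way to confirm that the firewall rule is in place is to attempt a TCP connection from the client machine. The following is a minimal sketch, not a Radoop feature: `is_port_open` is a hypothetical helper name, the host in the commented call is a placeholder, and 1081 is only the default port, so adjust it if a different port was chosen during installation.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Example call (placeholder host, replace with the machine running Radoop Proxy):
# is_port_open("radoop-proxy.example.com", 1081)
```

A `False` result only shows that the connection could not be established from this machine; it does not distinguish a firewall block from a proxy that is not running.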

If the cluster is secured using Kerberos, you must configure your local Kerberos client to use TCP communication only. To do so, add udp_preference_limit = 1 to the client-side Kerberos configuration file.
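
The sketch below checks whether a Kerberos client configuration already contains this setting. It assumes an MIT Kerberos style configuration, where the option belongs in the `[libdefaults]` section; the function name and the sample text are hypothetical, and the location of the file on your machine (often `/etc/krb5.conf` on Linux) is an assumption to verify locally.

```python
def has_tcp_only_preference(krb5_conf_text: str) -> bool:
    """Return True if [libdefaults] sets udp_preference_limit = 1."""
    section = None
    for raw in krb5_conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip().lower()  # entering a new section
            continue
        if section == "libdefaults" and "=" in line:
            key, value = (part.strip() for part in line.split("=", 1))
            if key == "udp_preference_limit":
                return value == "1"
    return False


# Hypothetical sample configuration, for illustration only.
sample = """
[libdefaults]
    default_realm = EXAMPLE.COM
    udp_preference_limit = 1
"""
print(has_tcp_only_preference(sample))
```

To check a real file, read its contents into a string and pass that string to the function.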

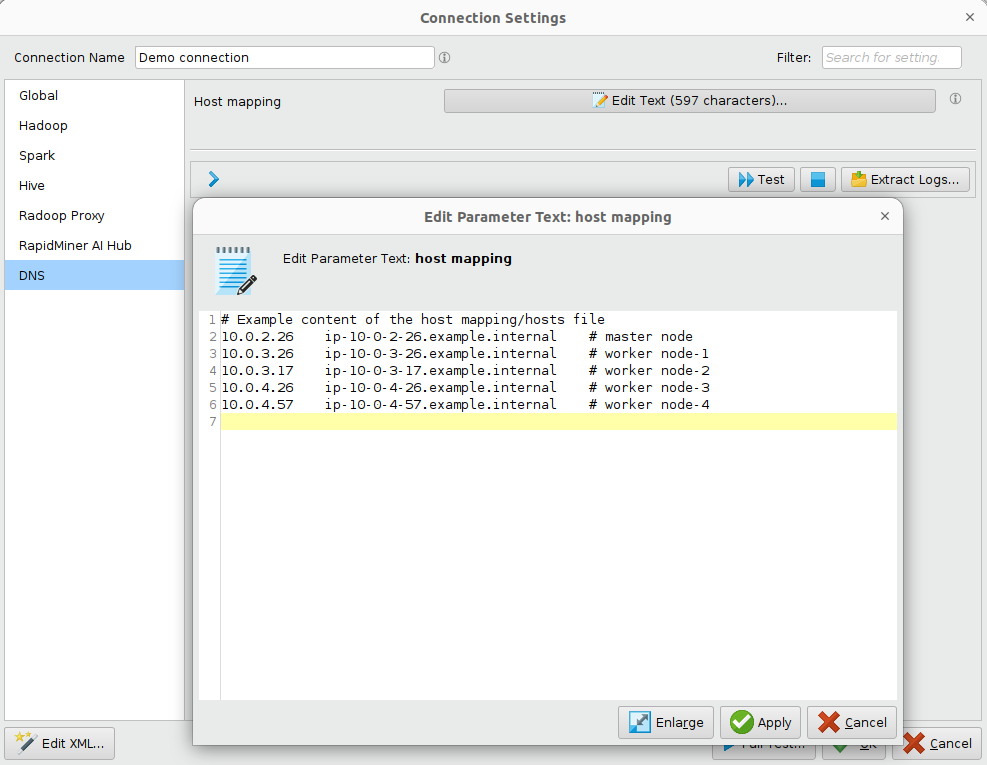

In Hadoop clusters, DNS and reverse DNS lookups are essential for Hadoop services to operate. Altair AI Studio and the cluster might not share the same network, so every node's internal IP address and hostname must be added in one of three places: the network name services (allowing dynamic configuration), the Radoop Connection (static, easily shareable configuration), or the local hosts file (static configuration). If nodes are accessible via multiple IP addresses or hostnames, use the pairs that are configured for the Hadoop services and that appear in the Kerberos Service Principals.
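
To see whether forward and reverse lookups agree for a given node from the client machine, a small check along these lines can help. This is a sketch under stated assumptions: `check_dns` is a hypothetical helper, and the resolver functions are passed in as parameters (defaulting to the standard `socket` lookups) so the logic can be exercised without a real cluster.

```python
import socket


def check_dns(hostname, expected_ip,
              forward=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Return a list of problems found for one (IP, hostname) pair."""
    problems = []
    try:
        ip = forward(hostname)  # forward lookup: name -> IP
        if ip != expected_ip:
            problems.append(f"{hostname} resolves to {ip}, expected {expected_ip}")
    except OSError as exc:
        problems.append(f"forward lookup failed for {hostname}: {exc}")
    try:
        name, aliases, _ = reverse(expected_ip)  # reverse lookup: IP -> name
        if hostname not in [name, *aliases]:
            problems.append(f"{expected_ip} maps back to {name}, expected {hostname}")
    except OSError as exc:
        problems.append(f"reverse lookup failed for {expected_ip}: {exc}")
    return problems
```

An empty list means both directions agree for that pair. Running the check for every node, with the hostnames the Hadoop services are configured with, gives a quick picture of what still needs a mapping.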

The recommended way of providing static host mapping is to edit the Host mapping parameter on the DNS tab inside the Radoop Connection. This way, the configuration is easily distributable and reusable via Altair AI Hub, without requiring local network configuration changes on each user machine. The Host mapping parameter expects IP-to-hostname entries in hosts file format, one entry per line, and should include every node belonging to the cluster.
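
As an illustration of the hosts file format, the sketch below parses such entries and validates the IP addresses before they are pasted into the connection. The addresses and hostnames are made up for the example, `parse_host_mapping` is a hypothetical helper, and support for `#` comments is an assumption carried over from ordinary hosts files rather than a documented property of the Host mapping parameter.

```python
import ipaddress


def parse_host_mapping(text):
    """Parse hosts-file-style text into a {hostname: ip} dictionary."""
    mapping = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        fields = line.split()
        if len(fields) < 2:
            raise ValueError(f"line {lineno}: expected 'IP hostname [alias ...]'")
        # ip_address raises ValueError if the first field is not a valid IP.
        ip = str(ipaddress.ip_address(fields[0]))
        for name in fields[1:]:
            mapping[name] = ip
    return mapping


# Hypothetical cluster nodes, for illustration only.
example = """
10.0.0.11  master1.cluster.internal  master1
10.0.0.21  worker1.cluster.internal
10.0.0.22  worker2.cluster.internal
"""
print(parse_host_mapping(example))
```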

On Linux and macOS, the hosts file is located at /etc/hosts; on Windows, at %SystemRoot%\System32\drivers\etc\hosts.

To configure a Radoop Proxy for a Radoop connection in Altair AI Studio, see the guide Configuring Radoop Proxy Connection. Securing Radoop Proxy communication with SSL is recommended to complete the setup.

Default Ports on a Hadoop cluster

To operate properly, the Radoop client needs access to the following ports on the cluster. To avoid opening all these ports, we recommend using Radoop Proxy, the secure proxy solution packaged as a .zip file or as a Cloudera Parcel.

| Component | Default Port | Notes |
|---|---|---|
| HDFS NameNode | 8020 or 9000 | Required on the NameNode master node(s). |
| ResourceManager | 8032 or 8050 and 8030, 8031, 8033 | The resource management platform on the ResourceManager master node(s). |
| JobHistory Server Port | 10020 | The port used for accessing information about MapReduce jobs after they terminate. |
| DataNode ports | 50010 and 50020 or 1004 | Access to these ports is required on every slave node. |
| Hive server port | 10000 | The Hive server port on the Hive master node; use this or the Impala port (below). |
| Impala daemon port | 21050 | The Impala daemon port on the node that runs the Impala daemon; use this or the Hive port (above). |
| Application Master | All possible ports | The Application Master uses random ports when binding. You can specify a range of allowed ports for this purpose by setting the yarn.app.mapreduce.am.job.client.port-range property on the Connection Settings dialog. |
| Timeline service | 8190 | Needed for Hadoop 3. See the Hadoop parameter yarn.timeline-service.webapp.address for details. |
| Kerberos | 88 | Optional: If the cluster is Kerberos enabled, the Kerberos port must be accessible to the client (both TCP and UDP are used). |
| Key Management Services | 16000 | Optional: If the cluster uses a Key Management Service (KMS), it must be accessible to the client. The connection URI is given by the Hadoop parameter dfs.encryption.key.provider.uri. |
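
When the Application Master is restricted to a port range, the firewall needs matching rules. The sketch below expands a range specification into individual ports so they can be listed or counted. It assumes the property value uses the comma-separated range syntax, for example `50000-50050,50100-50200`; confirm the accepted format for your Hadoop version. `parse_port_range` is a hypothetical helper, not part of Radoop or Hadoop.

```python
def parse_port_range(spec):
    """Expand a spec such as '50000-50002,50100' into a sorted list of ports."""
    ports = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            low, high = (int(p) for p in part.split("-", 1))
        else:
            low = high = int(part)  # a single port
        if not (0 < low <= high <= 65535):
            raise ValueError(f"invalid port range: {part!r}")
        ports.update(range(low, high + 1))
    return sorted(ports)


# Hypothetical value for yarn.app.mapreduce.am.job.client.port-range.
print(parse_port_range("50000-50002,50100"))
```

Keeping the range narrow limits how many ports must be opened, at the cost of limiting how many Application Masters can bind on one node at the same time.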

Radoop automatically sets the version-specific default ports when you select a Hadoop Version in the Manage Radoop Connections window. These defaults can always be changed.