You are viewing the RapidMiner Radoop documentation for version 9.5.

Known Hadoop Errors

This section lists errors in the Hadoop components that might affect RapidMiner Radoop process execution. If there is a workaround for an issue, it is also described here.

General Hadoop errors

When using a Radoop Proxy or a SOCKS Proxy, HDFS operations may fail

- The cause is HDFS-3068

- Affects: probably newer Hadoop versions; the ticket is still unresolved

- Error message (during Full Test or file upload):

java.lang.IllegalStateException: Must not use direct buffers with InputStream API

- Workaround is to add the dfs.client.use.legacy.blockreader property to Advanced Hadoop Parameters with a value of true.

- This should not be an issue as of Radoop 9.5.

Windows client does not work with Linux cluster on Hadoop 2.2 (YARN)

- The cause is YARN-1824

- Affects: Hadoop 2.2 - YARN, with Windows client and Linux cluster

- The import test fails, with a single line in the log:

/bin/bash: /bin/java: No such file or directory

- Setting mapreduce.app-submission.cross-platform to false changes the error message to "No job control".

- There is no workaround for this issue; upgrading to Hadoop 2.4+ is recommended.

AccessControlException in log messages

- The cause is HADOOP-11808

- Warning message:

WARN Client: Exception encountered while connecting to the server : org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]

- This message does not affect the execution or the results of the process.

YarnRuntimeException caused by org.codehaus.jackson.JsonParseException: Illegal unquoted character

- This is caused by a bug in recent versions of the JobHistory service, which may corrupt the history file if it is written in text mode.

Error message example:

java.io.IOException: org.apache.hadoop.ipc.RemoteException (org.apache.hadoop.yarn.exceptions.YarnRuntimeException): org.apache.hadoop.yarn.exceptions.YarnRuntimeException: Could not parse history file hdfs://cluster:123/mr-history/done/2019/[...]default-1563.jhist ... Caused by: org.codehaus.jackson.JsonParseException: Illegal unquoted character ((CTRL-CHAR, code 10)): has to be escaped using backslash to be included in name at [Source: org.apache.hadoop.hdfs.client.HdfsDataInputStream@233a323d: org.apache.hadoop.hdfs.DFSInputStream@3daaf42b; line: 19, column: 3999]

- Solution: add the following key-value pair to the Advanced Hadoop Parameters list for your connection: key mapreduce.jobhistory.jhist.format with value binary.
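The JsonParseException above complains about an unescaped control character with code 10, which is the ASCII line feed. As a rough illustration only (Python's standard json module stands in here for the Jackson parser that the JobHistory server actually uses), strict JSON parsing rejects a raw newline inside a quoted name but accepts the escaped form:

```python
import json

# Code 10 in the error message is the ASCII line feed character.
assert chr(10) == "\n"


def parses_as_json(text: str) -> bool:
    """Return True if text is valid JSON under Python's strict parser."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


# A name containing a raw (unescaped) newline is rejected...
raw = '{"counter\nname": 1}'
# ...while the same name with the newline escaped as \n is accepted.
escaped = '{"counter\\nname": 1}'

print(parses_as_json(raw))      # False
print(parses_as_json(escaped))  # True
```

Switching mapreduce.jobhistory.jhist.format to binary presumably sidesteps this class of problem because the history events are then no longer stored as JSON text.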

General Hive errors

SocketTimeoutException: Read timed out is thrown when using Hive-on-Spark

- When Hive-on-Spark is used with Spark Dynamic Allocation disabled, the parsing of a HiveQL command may start a SparkSession, and during that period other requests may fail. See HIVE-17532.

- Affects: Hive with Hive-on-Spark enabled

- Solution: enabling Spark Dynamic Allocation in the Hive service avoids this issue. Note that SocketTimeoutException may still be thrown for other reasons; please consult your Hadoop support in that case.

IllegalMonitorStateException is thrown during process execution

- The probable cause is HIVE-9598. It usually occurs after a long period of inactivity in the Studio interface, or if the HiveServer2 service is changed or restarted.

- Affects: Hive 0.13 (may affect earlier releases), said to be fixed in Hive 0.14

- Error message example:

java.lang.RuntimeException: java.lang.IllegalMonitorStateException

at eu.radoop.datahandler.hive.HiveHandler.runFastScriptTimeout(HiveHandler.java:761)

at eu.radoop.datahandler.hive.HiveHandler.runFastScriptsNoParams(HiveHandler.java:727)

at eu.radoop.datahandler.hive.HiveHandler.runFastScript(HiveHandler.java:654)

at eu.radoop.datahandler.hive.HiveHandler.dropIfExists(HiveHandler.java:1853) ...

Caused by: java.lang.IllegalMonitorStateException

at java.util.concurrent.locks.ReentrantLock$Sync.tryRelease(Unknown Source)

at java.util.concurrent.locks.AbstractQueuedSynchronizer.release(Unknown Source)

at java.util.concurrent.locks.ReentrantLock.unlock(Unknown Source)

at org.apache.hive.jdbc.HiveStatement.execute(HiveStatement.java:239)

- Workaround: Re-opening the process in Studio may solve it. If not, a Studio restart should solve it. If this is a constant issue on your cluster, please upgrade to a Hive version where this issue has been fixed (see ticket above).

Hive job fails with MapredLocalTask error

- Hive may start a so-called local task to perform a JOIN. If there is an error during this local work (typically, an out of memory error), it may only return an error code and not a proper error message.

- Affects: Hive 0.13.0, Hive 1.0.0, Hive 1.1.0 and potentially other versions

- Error message example (return code may differ):

return code 3 from org.apache.hadoop.hive.ql.exec.mr.MapredLocalTask

- The full error message can be found, by default, in the /tmp/hive/ local directory on the cluster node that performed the task.

- Workaround: check whether the Join operator in your process uses the appropriate keys, so that the result set does not explode. If the Join is defined correctly, add the following key-value pair to the Advanced Hive Parameters list for your connection: key hive.auto.convert.join with value false.

Hive job fails with Kryo serializer error

- The cause is probably the same as for HIVE-7711

- Affects: Hive 1.1.0 and potentially other versions

- Error message:

org.apache.hive.com.esotericsoftware.kryo.KryoException: Unable to find class: [...]

- Workaround: re-running the process might help. Adding the following key-value pair to the Advanced Hive Parameters list for the connection prevents this type of error: key hive.plan.serialization.format with value javaXML. Please note that starting from Hive 2.0, kryo is the only supported serialization format. See HIVE-12609 and Hive Configuration Properties for more information.

- Manually installing RapidMiner Radoop functions also prevents this type of error.

Hive job fails with NoClassDefFoundError error

- The cause is addressed in HIVE-2573; this patch is included in CDH 5.4.3

- Affects: CDH 5.4.0 to CDH 5.4.2

- Error message:

java.sql.SQLException: java.lang.NoClassDefFoundError: [...]

- Error message in HiveServer2 log:

java.lang.RuntimeException: java.lang.NoClassDefFoundError: [...]

- Solution: re-running the process may help, but an upgrade to CDH 5.4.3 is the permanent solution.

- Manually installing RapidMiner Radoop functions also prevents this type of error.

Hive job fails before completion

- The probable cause is HIVE-4605

- Affects: Hive 0.13.1 and below

- Error message:

Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

- Error message in HiveServer2 log:

org.apache.hadoop.hive.ql.metadata.HiveException: Unable to rename output from [...] .hive-staging_hive_[...] to: .hive-staging_hive_...

- There is no known workaround; please re-run the process, preferably without any concurrent read from the same Hive table.

JOIN may lead to NullPointerException in CDH

- The cause may be HIVE-3872, although no MAP JOIN hints are used

- Usually some kind of self join of a complex view leads to this error

- Workaround: use Materialize Data (or Multiply) before the Join operator. In case of multiple Joins, you may have to find out which Join is the first one that leads to this error, and materialize the data right before it.

Number of connections to Zookeeper reaches the maximum allowed

- The cause is that each HiveServer2 "client" creates a new connection to Zookeeper: HIVE-4132, HIVE-6375

- Affects: Hive 0.10 and several newer versions.

- Once the maximum number of connections is reached (the default is 60, which Radoop can easily reach), HiveServer2 becomes inaccessible, since its connection attempt to Zookeeper fails. A HiveServer2 or Zookeeper restart is required in this case.

- Workaround: increase the maxClientCnxns property of Zookeeper, e.g. to 500.
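maxClientCnxns is a key=value setting of the ZooKeeper server configuration file (commonly zoo.cfg), and ZooKeeper has to be restarted to pick it up. The sketch below uses a hypothetical helper, set_property, that replaces an existing uncommented line for a key or appends a new one; the sample file contents, including the dataDir path, are made up for illustration:

```python
def set_property(cfg_text: str, key: str, value: str) -> str:
    """Set key=value in properties-style config text (hypothetical helper).

    An existing uncommented line for the key is replaced; otherwise the
    setting is appended at the end. Commented-out lines are left untouched.
    """
    lines = cfg_text.splitlines()
    new_line = f"{key}={value}"
    for i, line in enumerate(lines):
        name, sep, _ = line.partition("=")
        if sep and name.strip() == key:
            lines[i] = new_line
            return "\n".join(lines) + "\n"
    lines.append(new_line)
    return "\n".join(lines) + "\n"


# Hypothetical zoo.cfg contents.
zoo_cfg = "tickTime=2000\ndataDir=/var/lib/zookeeper\nclientPort=2181\n"
print(set_property(zoo_cfg, "maxClientCnxns", "500"))
```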

Non latin1 characters may cause encoding issues when used in a filter clause or in a script

- The cause is that RapidMiner Radoop relies heavily on Hive VIEW objects. The code of a VIEW is stored in the Hive Metastore database, which is an arbitrary relational database usually created during the Hadoop cluster install. If this database does not handle the character encoding well, then the RapidMiner Radoop interface will also have issues.

- Affects: Hive Metastore DB created by default MySQL scripts, and it may affect other databases as well

- Workaround: your Hadoop administrator can test whether your Hive Metastore database can deal with the desired encoding. A Hive VIEW, created through Beeline, that contains a filter clause with non latin1 characters should return the expected result set when used as a source object in a SELECT query. Please contact your Hadoop support regarding encoding issues with Hive.

Confidence values are missing or predicted label may be shown incorrectly (e.g. after a Discretize operator)

- This issue probably comes up only if the Hive Metastore is installed on a MySQL relational database with latin1 as the default character set, and the label contains special multibyte UTF-8 characters, like the infinity symbol (∞) that the Discretize operator uses.

- Affects: Hive Metastore DB created by default MySQL scripts, and it may affect other databases as well

- Workaround: your Hadoop administrator can test whether your Hive Metastore database can deal with the desired encoding. A Hive VIEW, created through Beeline, that contains a filter clause with non latin1 characters should return the expected result set when used as a source object in a SELECT query. Please contact your Hadoop support regarding encoding issues with Hive.
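To see why a latin1 Metastore can mangle such labels, the following Python sketch checks whether a string is representable in ISO-8859-1 (latin-1). This is an approximation: MySQL's latin1 is actually closer to Windows-1252, but neither character set contains the infinity symbol, which takes three bytes in UTF-8. The example label is hypothetical:

```python
def fits_latin1(text: str) -> bool:
    """Return True if every character of text exists in ISO-8859-1."""
    try:
        text.encode("latin-1")
        return True
    except UnicodeEncodeError:
        return False


label = "range1 [-∞ - 2.5]"  # hypothetical Discretize-style label

print(fits_latin1(label))        # False
print(len("∞".encode("utf-8")))  # 3
print(fits_latin1("résumé"))     # True
```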

Attribute roles and binominal mappings may be lost when storing in a Hive table with non-default format

- The cause is HIVE-6681

- It is probably fixed in Hive 0.13

- As the roles and the binominal mappings are stored in column comments, they are lost when these comments are replaced with "from deserializer".

PARQUET format may cause Hive to fail

- There are several related issues, one of them is HIVE-6375

- Affects: said to be fixed in Hive 0.13, but issues like HIVE-6938 may still occur

- The CREATE TABLE AS SELECT statement fails with MapRedTask return code 2, with a NullPointerException, or with an ArrayIndexOutOfBoundsException because of column name issues.

- Workaround is to use a different format. The Materialize Data operator may not be enough, as the CTAS statement itself gives the error.

Hive may hang while parsing large queries

- When submitting large queries to the Hive parser, the execution may stop and later fail or never recover.

- Workaround: this issue usually happens with the Apply Model operator and very large models (like Trees). Setting the use general applier parameter to true avoids the large queries while producing the same result.

Unable to cancel certain Hive-on-Spark queries

- The cause is HIVE-13626

- The YARN application can be stuck in RUNNING state on the cluster if the query is canceled immediately after it is submitted.

- This issue can be experienced by chance when a process using Hive-on-Spark is stopped.

- To resolve the situation, one can kill the job manually.

Starting a Hive-on-Spark job fails due to timeout

- The hive.spark.client.server.connect.timeout property is set to 90000ms by default. This may be too short for a Hive-on-Spark job to start, especially when multiple jobs are waiting for execution (e.g. parallel execution of processes).

- A dedicated error message explains this issue.

- The property value can be modified only in the hive-site.xml cluster configuration file.
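A Hadoop-style property in hive-site.xml is a property element with name and value children. The sketch below builds such an element with Python's xml.etree.ElementTree; the 300000ms value (5 minutes) is only an illustrative assumption, not a recommended setting, and the edited file still has to be deployed to the cluster through your usual configuration management:

```python
import xml.etree.ElementTree as ET


def hive_property(name: str, value: str) -> ET.Element:
    """Build a <property> element in the hive-site.xml layout."""
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = name
    ET.SubElement(prop, "value").text = value
    return prop


# The default of 90000ms corresponds to 90 seconds.
default_seconds = 90000 / 1000

# Illustrative value only; choose one that fits your workload.
prop = hive_property("hive.spark.client.server.connect.timeout", "300000ms")
print(ET.tostring(prop, encoding="unicode"))
```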

SemanticException View xxx is corresponding to LIMIT, rather than a SelectOperator

- The cause is HIVE-17415

- Affects: Hive 2.3 - 2.4, appears to be fixed in Hive version 2.5 and above

- The user is attempting to create a VIEW with a LIMIT clause, which is not allowed in the affected Hive versions

- This is most often seen when using the Filter Example Range operator or when a LIMIT is specified in the Hive Script operator

General Impala errors

Impala may return empty results when DISTINCT or COUNT(DISTINCT ..) expressions are used

- There are a lot of similar bug tickets.

- It seems to come up only when an INSERT is used (Store in Hive operator). The DISTINCT expression may be used in the Aggregate or Remove Duplicates operators.

- A related issue: Impala still does not support multiple COUNT(DISTINCT ..) expressions (IMPALA-110)

General Spark errors

Spark Script with Hive access fails with "GSS initiate failed" when user impersonation is enabled

- When using Spark 1.5 or Spark 1.6 with user impersonation enabled in the Radoop connection, a Spark Script operator that has enable Hive access set to true may fail with a security error.

- Cause is SPARK-13478.

- Workaround is to update Spark on the cluster.

Spark 2.0.1 is unable to create database

- On Spark 2.0.1, the execution fails with the following exception:

"Unable to create database default as failed to create its directory hdfs://"...

- Cause is SPARK-17810.

- Affects only Spark 2.0.1. Please use Spark 2.0.0 or upgrade to Spark 2.0.2 or later.

- Workaround is to add spark.sql.warehouse.dir as an Advanced Spark Parameter with a path that begins with "file:/". This is not expected to work on Windows.
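A value of the required form can be derived from an absolute POSIX path. The sketch below (local_warehouse_uri is a hypothetical helper, and /tmp/spark-warehouse an arbitrary example location) prefixes the path with "file://", which yields a URI beginning with "file:/" as required:

```python
from pathlib import PurePosixPath
from urllib.parse import quote


def local_warehouse_uri(path: str) -> str:
    """Turn an absolute POSIX path into a file: URI for spark.sql.warehouse.dir."""
    p = PurePosixPath(path)
    if not p.is_absolute():
        raise ValueError(f"expected an absolute path, got {path!r}")
    # "file://" + "/abs/path" gives "file:///abs/path", i.e. it starts with "file:/".
    return "file://" + quote(p.as_posix())


print(local_warehouse_uri("/tmp/spark-warehouse"))  # file:///tmp/spark-warehouse
```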

Spark job may fail with relatively large ORC input data

- Error message:

"Size exceeds Integer.MAX_VALUE"

- The cause is SPARK-1476

- Workaround is to use the Text input format. The bug may also occur with the Text format if the HDFS blocks are large.
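Java's Integer.MAX_VALUE is 2^31 - 1 = 2,147,483,647 bytes, one byte short of 2 GiB, so the error is expected once a single block that Spark handles reaches that size. A quick arithmetic check in Python; the block sizes are example values:

```python
JAVA_INT_MAX = 2**31 - 1  # java.lang.Integer.MAX_VALUE
MIB = 1024**2
GIB = 1024**3


def exceeds_int_max(size_bytes: int) -> bool:
    """True if a block of this size cannot be indexed with a Java int."""
    return size_bytes > JAVA_INT_MAX


print(JAVA_INT_MAX)                  # 2147483647
print(exceeds_int_max(256 * MIB))    # False (256 MiB)
print(exceeds_int_max(2 * GIB))      # True  (exactly 2 GiB)
```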

Reading the output of Spark Script may fail for older Hive versions if the DataFrame contains too many null values

- The Spark job succeeds, but reading the output parquet table fails with NullPointerException.

- Affects Hive 1.1 and below. The cause is PARQUET-136.

- Workaround is to use the fillna() function on the output DataFrame (Python API, R API).

Exception in Spark job may not fail the process if no output connection is defined

- An exception occurs in the Spark script, but the Spark job succeeds. If the operator has no output connection, the process succeeds as well.

- Cause is SPARK-7736 and SPARK-10851.

- Affects Spark 1.5.x. Fixed in Spark 1.5.1 for Python, Spark 1.6.0 for R. The exception can be checked in the ApplicationMaster logs on the Resource Manager web interface.

- Workaround is upgrading to Spark 1.5.1/1.6.0 or making a dummy output connection and returning a (small) dummy dataset.

Spark Error InvalidAuxServiceException: The auxService:spark_shuffle does not exist

- Typically caused when the Spark Resource Allocation Policy of a connection is set to Dynamic, but the Hadoop cluster that Spark runs on is not set up for Dynamic Resource Allocation.

- Either configure the Hadoop cluster for Dynamic Resource Allocation, or change the Spark Resource Allocation Policy for the connection in the connection editor.
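Dynamic Resource Allocation on YARN relies on Spark's external shuffle service, which is registered in yarn-site.xml through yarn.nodemanager.aux-services (a comma-separated list that should contain spark_shuffle) together with yarn.nodemanager.aux-services.spark_shuffle.class set to org.apache.spark.network.yarn.YarnShuffleService. The sketch below checks both entries in a dictionary of configuration values; the helper and the sample values are hypothetical:

```python
SHUFFLE_CLASS = "org.apache.spark.network.yarn.YarnShuffleService"


def has_spark_shuffle(aux_services: str) -> bool:
    """Check a yarn.nodemanager.aux-services value for the spark_shuffle entry."""
    services = [s.strip() for s in aux_services.split(",") if s.strip()]
    return "spark_shuffle" in services


# Hypothetical yarn-site.xml values copied from a cluster.
yarn_site = {
    "yarn.nodemanager.aux-services": "mapreduce_shuffle,spark_shuffle",
    "yarn.nodemanager.aux-services.spark_shuffle.class": SHUFFLE_CLASS,
}

ok = (
    has_spark_shuffle(yarn_site.get("yarn.nodemanager.aux-services", ""))
    and yarn_site.get("yarn.nodemanager.aux-services.spark_shuffle.class") == SHUFFLE_CLASS
)
print(ok)  # True
```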

Spark Error java.lang.StackOverflowError on executor side

This is a general error, which can be caused by multiple problems, but a known Spark issue is among the possible causes. It can be identified by a very large Java deserialization stack trace with lots of ObjectInputStream calls. Random Forest and Decision Tree are known to be affected, but you might experience this problem with other Spark operators as well. Example error snippet:

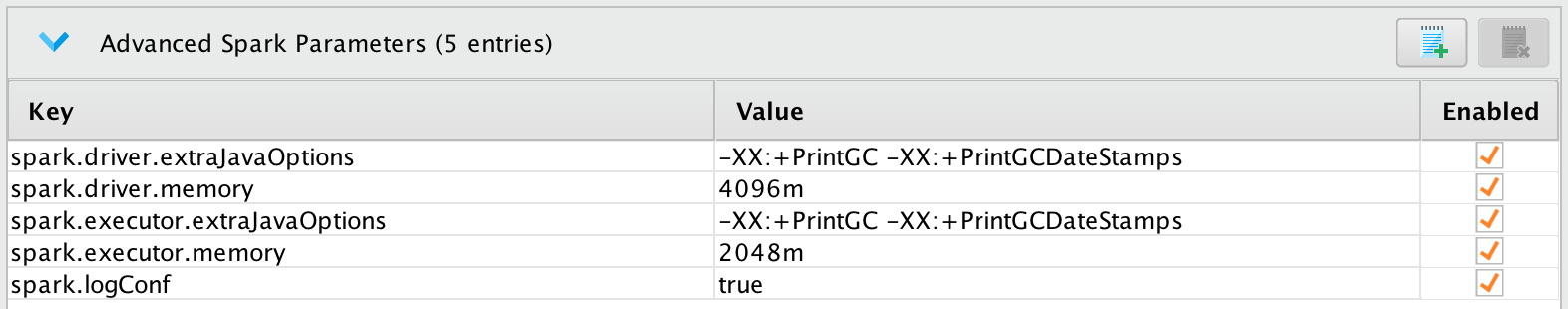

java.lang.StackOverflowError at java.io.ObjectInputStream$BlockDataInputStream.peekByte(ObjectInputStream.java:2956) at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1736) at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2040) at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1571) at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2285) at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2209) at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2067) at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1571) at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2285) at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2209) at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2067) at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1571) at java.io.ObjectInputStream.readObject(ObjectInputStream.java:431) at scala.collection.immutable.List$SerializationProxy.readObject(List.scala:479) at sun.reflect.GeneratedMethodAccessor2.invoke(Unknown Source) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498)

- The issue is caused by the deserialization process exceeding the available stack size inside the executor process. A possible solution is to increase the executor stack size by adding the following key-value pair to Advanced Spark Parameters: spark.executor.extraJavaOptions with the value of -Xss4m, and making sure that spark.driver.extraJavaOptions has the same value. The -Xss option sets the thread stack size of the Java runtime; see the Java documentation for details.
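If spark.executor.extraJavaOptions or spark.driver.extraJavaOptions already carries other JVM flags, it is safer to merge the -Xss setting into the existing value than to overwrite it. The sketch below operates on a plain dictionary of Advanced Spark Parameters; the helpers and the sample -XX:+UseG1GC flag are hypothetical, and the code simply replaces any existing -Xss flag in both keys:

```python
import shlex


def with_stack_size(java_opts: str, size: str = "4m") -> str:
    """Return java_opts with any existing -Xss flag replaced by -Xss<size>."""
    opts = [o for o in shlex.split(java_opts) if not o.startswith("-Xss")]
    opts.append(f"-Xss{size}")
    return shlex.join(opts)


def set_stack_size(params: dict, size: str = "4m") -> dict:
    """Apply the same -Xss setting to the executor and driver JVM options."""
    updated = dict(params)  # leave the caller's dictionary untouched
    for key in ("spark.executor.extraJavaOptions", "spark.driver.extraJavaOptions"):
        updated[key] = with_stack_size(params.get(key, ""), size)
    return updated


params = {"spark.executor.extraJavaOptions": "-XX:+UseG1GC -Xss1m"}
print(set_stack_size(params))
```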

Container exited with a non-zero exit code 143

The symptom is that the Spark job logs requested from YARN show the following exception:

INFO yarn.ApplicationMaster: Unregistering ApplicationMaster with FAILED [...] Exit status: 143. Diagnostics: Container killed on request. Exit code is 143 Container exited with a non-zero exit code 143 Killed by external signal

Generally speaking, YARN killed the application because it tried to use YARN resources beyond its limits, which can be vCores, memory, or other resource types.
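Exit code 143 follows the common convention of 128 plus the number of the terminating signal: 143 - 128 = 15, which is SIGTERM, consistent with the "Killed by external signal" diagnostic. The small Python sketch below (decode_exit_code is a hypothetical helper, not part of YARN or Radoop) decodes such codes:

```python
import signal


def decode_exit_code(code: int) -> str:
    """Interpret a container exit code using the 128 + signal convention."""
    if code > 128:
        try:
            return signal.Signals(code - 128).name
        except ValueError:
            return f"unknown signal {code - 128}"
    return f"exit status {code}"


print(decode_exit_code(143))  # SIGTERM
print(decode_exit_code(137))  # SIGKILL (on POSIX platforms)
```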

- Most commonly appears when the Radoop Connection is set to use Spark Dynamic Resource Allocation and the memory settings are left at the cluster defaults (which could be too small to execute anything meaningful).

- Increasing the driver's available memory usually solves the issue. A further remedy is to enable GC logging for the Spark job and start fine tuning the resource settings related to Spark job submission in Radoop Connection -> Advanced Spark Parameters. Good candidates for memory fine tuning are spark.[driver|executor].[cores|memory|memoryOverhead]. Please check the Spark documentation for further details (refer to the version available on your cluster, as defaults may differ).
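The bracket notation above is shorthand for six property names. Expanding it with itertools.product, as in this trivial sketch, makes the full list explicit. Note that the memoryOverhead keys were named differently in some older Spark versions (for example spark.yarn.executor.memoryOverhead), so check the documentation of the version on your cluster:

```python
from itertools import product


def expand_resource_keys() -> list:
    """Expand spark.[driver|executor].[cores|memory|memoryOverhead]."""
    return [
        f"spark.{role}.{setting}"
        for role, setting in product(("driver", "executor"),
                                     ("cores", "memory", "memoryOverhead"))
    ]


for key in expand_resource_keys():
    print(key)
```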

Spark job fails on java.lang.IllegalArgumentException after upgrading Spark version

- The following error in the container logs is caused by a behavioural change in how Spark parses driver and executor memory settings: in contrast to earlier versions, which treated pure numbers as MiB, recent versions treat pure numbers as bytes.

Error message example:

java.lang.IllegalArgumentException: Executor memory 3000 must be at least 471859200. Please increase executor memory using the --executor-memory option or spark.executor.memory in Spark configuration.

It is recommended to be explicit about the given amounts and always use a unit suffix (e.g. "m" for MiB) when performing Spark memory related fine tuning in Radoop Connection -> Advanced Spark Parameters.
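The numbers in the error message can be checked directly: 471859200 bytes is 450 MiB (450 × 1024 × 1024), while the unitless 3000 was read as 3000 bytes. The sketch below is a simplified, hypothetical parser (not Spark's own code) that mimics the two interpretations described above for strings such as 3000, 3000m or 4g:

```python
import re

# Binary multipliers for the unit suffixes used in Spark size strings.
_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3, "t": 1024**4}


def to_bytes(value: str, unitless_unit: str = "b") -> int:
    """Convert a size such as '3000', '3000m' or '4g' to bytes.

    unitless_unit controls how a bare number is read: 'b' (bytes, the newer
    behaviour) or 'm' (MiB, the older behaviour).
    """
    match = re.fullmatch(r"\s*(\d+)\s*([bkmgt])?b?\s*", value.lower())
    if match is None:
        raise ValueError(f"unrecognized size: {value!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit or unitless_unit]


MIN_EXECUTOR_BYTES = 450 * 1024**2  # the 471859200 from the error message

print(to_bytes("3000"))                          # 3000, far below the minimum
print(to_bytes("3000", unitless_unit="m"))       # 3145728000 (older MiB reading)
print(to_bytes("3000m") >= MIN_EXECUTOR_BYTES)   # True
```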

General MapR errors

Support for MapR clusters by RapidMiner Radoop has been deprecated.

Error util.Shell: Failed to locate the winutils binary in the hadoop binary path

Or

java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

- Both of these errors indicate that the HADOOP_HOME environment variable is not set correctly.

- Most often seen on Windows systems, when the HADOOP_HOME environment variable is not set.

- Set the HADOOP_HOME system-wide environment variable to the location of the Hadoop directory. For a normal MapR install this will be %MAPR_HOME%/hadoop/hadoop-x.y.z, where x.y.z is the Hadoop version.
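A quick way to verify the setting on the client machine is to check that HADOOP_HOME points to a directory containing bin\winutils.exe, the file that the second error message fails to locate. The following Python sketch uses a hypothetical function name and only the standard library:

```python
import os
from typing import Mapping, Optional


def winutils_path(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the path of winutils.exe under HADOOP_HOME, or None if missing."""
    env = os.environ if env is None else env
    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        return None  # an unset HADOOP_HOME leads to the null\bin\winutils.exe message
    candidate = os.path.join(hadoop_home, "bin", "winutils.exe")
    return candidate if os.path.isfile(candidate) else None


if winutils_path() is None:
    print("HADOOP_HOME is unset or does not contain bin\\winutils.exe")
```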

java.lang.UnsatisfiedLinkError: org.apache.hadoop.io.nativeio.NativeIO$Windows.access0(Ljava/lang/String;I)Z

- The error message indicates that the appropriate binary libraries could not be found.

- Most often seen on Windows systems, when the PATH does not contain the binaries located at %HADOOP_HOME%\bin.

- Workaround is to check that the HADOOP_HOME system-wide environment variable is set correctly, and append %HADOOP_HOME%\bin to the system-wide PATH environment variable.
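Whether PATH already contains the bin directory under HADOOP_HOME can be checked in a similar way. This hypothetical helper splits a Windows PATH value on semicolons and compares normalized, case-folded paths using ntpath, so it behaves the same on any operating system; the MapR install location and Hadoop version in the example are made up:

```python
import ntpath


def hadoop_bin_on_path(path_value: str, hadoop_home: str) -> bool:
    """True if the bin directory under hadoop_home is listed in a Windows PATH value."""
    wanted = ntpath.normcase(ntpath.normpath(ntpath.join(hadoop_home, "bin")))
    for entry in path_value.split(";"):
        entry = entry.strip().strip('"')
        if entry and ntpath.normcase(ntpath.normpath(entry)) == wanted:
            return True
    return False


# Hypothetical values as they might appear on a Windows client.
home = r"C:\opt\mapr\hadoop\hadoop-2.7.0"
print(hadoop_bin_on_path(r"C:\Windows\system32;C:\opt\mapr\hadoop\hadoop-2.7.0\bin", home))  # True
```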

java.lang.ClassCastException: com.mapr.fs.MapRFileSystem cannot be cast to org.apache.hadoop.hdfs.DistributedFileSystem

- The error indicates that the Hive table LOCATION was specified with hdfs://, while the expected prefix is maprfs://

- This most often occurs when using the Store in Hive operator with a MapR 6.1 cluster, when the external table advanced option is checked and the location text field is specified with hdfs://.

- Workaround is to specify a location on the Store in Hive operator prefixed with maprfs:// and not hdfs://.
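If table locations are generated or templated outside Studio, a simple pre-check can rewrite the scheme before the value is entered in the Store in Hive operator. The helper and the example path below are hypothetical:

```python
def to_maprfs(location: str) -> str:
    """Rewrite an hdfs:// table location to use the maprfs:// prefix."""
    prefix = "hdfs://"
    if location.lower().startswith(prefix):
        return "maprfs://" + location[len(prefix):]
    return location  # already maprfs:// (or another scheme): leave unchanged


print(to_maprfs("hdfs:///user/radoop/external_table"))  # maprfs:///user/radoop/external_table
```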